Quadtree Convolutional Neural Networks

Abstract

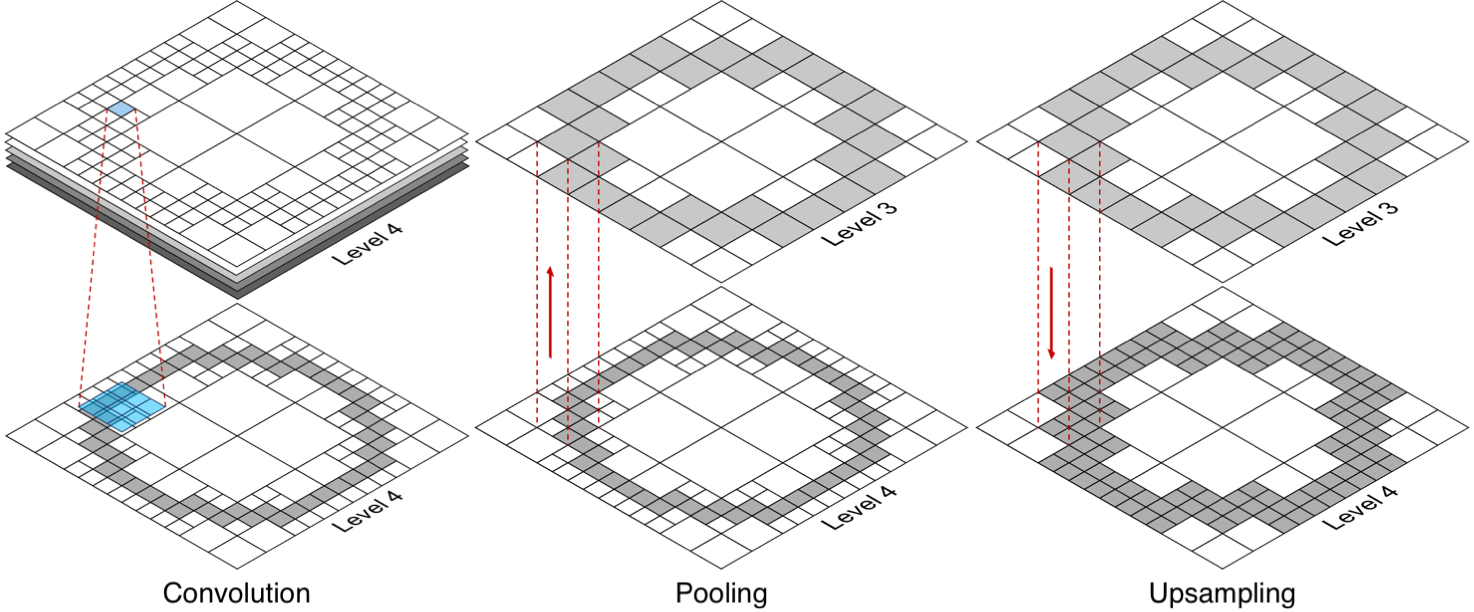

This paper presents a Quadtree Convolutional Neural Network (QCNN) for efficiently learning from image datasets that represent sparse data, such as handwriting, pen strokes, and freehand sketches. Instead of storing the sparse sketches in regular dense tensors, our method decomposes the image and represents it as a linear quadtree that is refined only in the non-empty portions of the image. The actual image data corresponding to non-zero pixels is stored in the finest nodes of the quadtree. Convolution and pooling operations are restricted to the sparse pixels, which reduces both computation time and memory usage. Specifically, the computational and memory costs in QCNN grow linearly in the number of non-zero pixels, as opposed to traditional CNNs where the costs are quadratic in the number of pixels. This enables QCNN to learn from sparse images much faster and to process high-resolution images without the memory constraints faced by traditional CNNs. We study QCNN on four sparse image datasets for sketch classification and simplification tasks. The results show that QCNN can obtain comparable accuracy with a large reduction in computational and memory costs.
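
To make the data structure concrete, the following is a minimal sketch of a linear quadtree that is refined only where the image is non-empty. It is an illustration under stated assumptions, not the paper's implementation: the function names `morton_key` and `build_linear_quadtree` are hypothetical, the use of Z-order (Morton) keys to index nodes is an assumption commonly made for linear quadtrees, and the input is assumed to be a square image whose side length is a power of two.

```python
def morton_key(x, y, bits):
    """Interleave the low `bits` bits of x and y into one Z-order key."""
    key = 0
    for i in range(bits):
        key |= ((x >> i) & 1) << (2 * i)
        key |= ((y >> i) & 1) << (2 * i + 1)
    return key


def build_linear_quadtree(image):
    """Return one sorted key list per level, keeping only non-empty nodes.

    levels[0] is the root level and levels[-1] is the finest level, where
    each key corresponds to a single non-zero pixel. `image` is a square
    list of lists whose side length is a power of two (an assumption of
    this sketch).
    """
    n = len(image)
    depth = n.bit_length() - 1
    assert n > 0 and (1 << depth) == n and all(len(row) == n for row in image)

    # Finest level: one key per non-zero pixel.
    finest = sorted(
        morton_key(x, y, depth)
        for y, row in enumerate(image)
        for x, value in enumerate(row)
        if value
    )

    # Coarser levels: a parent's key is its child's key without the last
    # two bits, so a parent exists only if at least one child is non-empty.
    levels = [finest]
    for _ in range(depth):
        levels.append(sorted({key >> 2 for key in levels[-1]}))
    levels.reverse()
    return levels


# Example: an 8x8 image containing a short diagonal stroke of three pixels.
image = [[0] * 8 for _ in range(8)]
for i in range(3):
    image[i][i] = 1

levels = build_linear_quadtree(image)
stored = sum(len(level) for level in levels)     # nodes kept by the quadtree
dense = sum(4 ** d for d in range(len(levels)))  # nodes in a dense pyramid
print(levels)          # [[0], [0], [0, 3], [0, 3, 12]]
print(stored, dense)   # 7 85
```

In this toy case the quadtree stores 7 nodes, whereas a dense pyramid over the same 8x8 image has 85 cells. The number of stored nodes is bounded by the number of non-zero pixels times the number of levels, which is the kind of sparsity-dependent cost the abstract describes.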